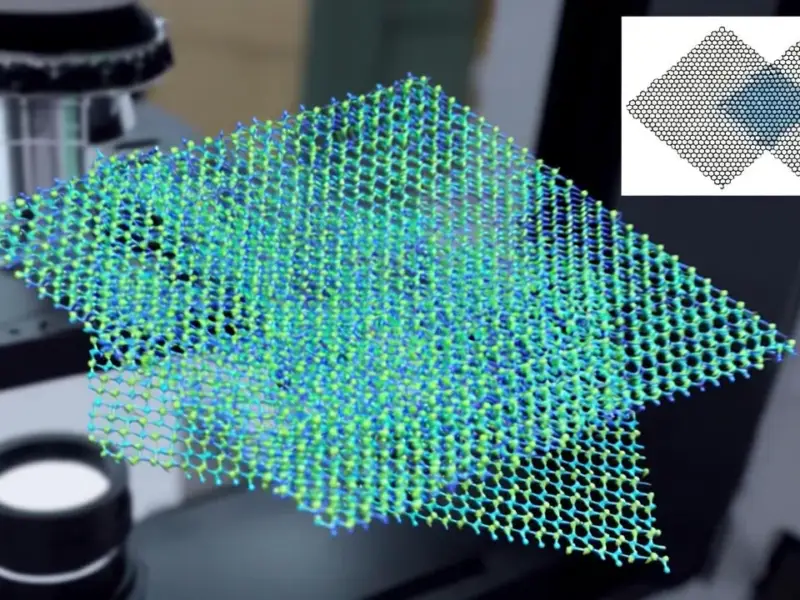

According to Dark Reading, Vaishnav Anand, a 17-year-old high school junior from California, was personally targeted by a deepfake, an experience that sparked his research into a far less scrutinized threat: AI-generated satellite imagery. After his panic subsided, he developed a method to detect these “deepfake geography” maps, which he recently presented at the IEEE Undergraduate Research Technology Conference at MIT. His work addresses a critical gap: only a handful of studies, including a 2021 paper, have explored how AI can convincingly blend features from one city into another’s satellite map. Anand warns that the consequences of such forgeries, such as faking disasters or hiding infrastructure, could be catastrophic for national security and public trust. He has also authored a cybersecurity guide for non-experts and founded a high school club called Tech and Ethics to discuss innovation’s guardrails.

The Sneaky Danger of a Fake Map

Here’s the thing: we’re all getting pretty skeptical of weird videos of celebrities or politicians saying crazy stuff. We’ve been trained to doubt what we see. But a map? A satellite image? That still feels like objective truth. It’s data. Anand nailed it when he said that’s exactly what makes geospatial deepfakes so dangerous: they slip under our radar. We use these images to plan disaster response, invest in infrastructure, and make military decisions. If an adversary can subtly alter a “little bit of that data,” the downstream effects are terrifying. It’s not just about hiding a building; it’s about eroding the foundational trust that governments, journalists, and the public place in what’s supposed to be an impartial view from above. That’s an attack on reality itself.

Finding the AI’s Fingerprints

So how do you catch a fake that’s designed to look perfect? Anand’s research gets into the technical weeds in a smart way. He looked at the two main types of AI image generators: GANs and diffusion models. Because they create images through fundamentally different processes, they leave distinct “fingerprints” in the final product. He’s not just looking for a weird-looking tree or a blurry pixel; that’s surface-level stuff that the next AI model will fix. He’s hunting for deeper, structural inconsistencies in the image data that betray its synthetic origin. It’s a classic cat-and-mouse game, as he admits. The faking tech will always evolve first. But his work is about building a detection discipline that can try to keep pace, to maintain some baseline of trust in the data pipelines that, frankly, the entire modern world relies on. For industries from logistics to defense that depend on accurate geospatial data for critical monitoring and decision-making, this isn’t academic. It’s operational security.
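To make the idea of a structural “fingerprint” a little more concrete, here is a minimal sketch of one kind of signal that detection work on generated images often examines: how an image’s energy is spread across spatial frequencies. The source does not describe Anand’s actual method, so this is not his technique. The function names, the `cutoff` parameter, and the toy images are all assumptions made up for illustration, and a real detector would need far more than a single statistic.

```python
import numpy as np

def radial_power_spectrum(image: np.ndarray) -> np.ndarray:
    """Average the 2-D power spectrum over rings of equal spatial frequency."""
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    power = np.abs(spectrum) ** 2
    h, w = image.shape
    y, x = np.indices((h, w))
    # Integer distance of every frequency bin from the spectrum's center (DC).
    radius = np.hypot(y - h // 2, x - w // 2).astype(int)
    ring_sum = np.bincount(radius.ravel(), weights=power.ravel())
    ring_count = np.bincount(radius.ravel())
    return ring_sum / np.maximum(ring_count, 1)

def high_frequency_ratio(image: np.ndarray, cutoff: float = 0.5) -> float:
    """Share of ring-averaged power above `cutoff` (hypothetical statistic)."""
    profile = radial_power_spectrum(image)
    max_radius = min(image.shape) // 2
    profile = profile[1:max_radius]  # drop DC, keep only complete rings
    split = int(len(profile) * cutoff)
    return float(profile[split:].sum() / profile.sum())

# Toy data only: a smooth blob stands in for a "real" tile, and the same blob
# plus a faint periodic pattern stands in for a tile with a synthetic artifact.
size = 64
yy, xx = np.indices((size, size))
real_tile = np.exp(-((xx - 32) ** 2 + (yy - 32) ** 2) / (2 * 10.0 ** 2))
fake_tile = real_tile + 0.1 * np.cos(2 * np.pi * 24 * xx / size)

print("real:", high_frequency_ratio(real_tile))
print("fake:", high_frequency_ratio(fake_tile))
```

In this toy setup the tile with the periodic pattern carries a larger share of high-frequency energy than the smooth one. Real satellite imagery is much messier, which is part of why the cat-and-mouse framing fits: any single statistic like this is something a newer generator could learn to imitate.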

Building a Culture of Healthy Skepticism

What I find most impressive here isn’t just the technical work—it’s Anand’s holistic approach. He got hit with a problem, solved it for himself technically, and then immediately thought, “How do I help everyone else?” He wrote a plain-English cybersecurity book. He started a high school club to debate tech ethics. He’s speaking to intelligence professionals. His mission is clearly about building resilience through awareness and education. His advice to start with a problem that “genuinely impacts you” is powerful because it’s how real, passionate innovation happens. You don’t need a PhD to see a threat and start digging. You just need that curiosity, and maybe a healthy dose of panic-turned-into-purpose. It’s a reminder that the next generation of security experts isn’t waiting for permission; they’re already in the fight, and they’re coming at it from angles the old guard hasn’t even considered.