According to The Verge, Australia will begin enforcing a law on December 10th that requires major social media platforms to remove existing accounts held by users under 16 and to block new ones. The rule stems from the Online Safety Amendment (Social Media Minimum Age) Bill passed in November 2024 and applies to at least 11 services, including Facebook, Instagram, TikTok, Snapchat, X, Reddit, YouTube, Twitch, and Threads. Platforms must take “reasonable steps” to identify and boot Australian kids, but the law doesn’t prescribe a specific verification method; it says only that they can’t rely solely on government ID. Kids will still be able to browse logged out, but they will lose access to feeds, messaging, and posting. The policy is backed by Prime Minister Anthony Albanese but opposed by groups like Amnesty Tech and by the platforms themselves, who warn it will push young people to less safe parts of the internet.

How platforms are scrambling

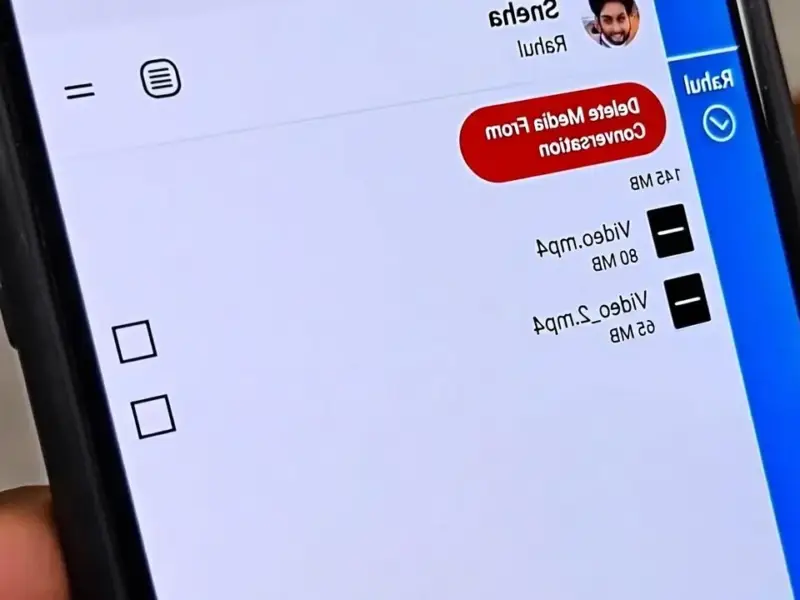

So here’s where it gets messy. Every major platform is complying, but they’re all grumbling about it and using different methods. Meta says it’ll use “signals” to guess a user’s age (the same system it uses for its Teen Accounts) and will lock out suspected minors. YouTube will rely on the age on a user’s Google account plus other signals to auto-sign out under-16s, stripping them of likes, subscriptions, and comments. Reddit is getting more aggressive: it will run an “age prediction model” on Aussie users and force those flagged to verify via a third-party service, which requires an ID upload or a selfie.

Snapchat is interesting because it’s arguing it’s not even a social platform, calling itself a “visual messaging app.” But it’s complying anyway, locking accounts and offering age checks via bank details, ID scan, or facial estimation. Basically, it’s a patchwork of half-measures. And every single one of these companies is on record saying this is a bad idea. Meta calls the law a “blanket ban” that isolates teens. YouTube says the law “fundamentally misunderstands” why teens use the service and that it removes safety features. Reddit says it undermines free expression and privacy. They’re all toeing the line while loudly complaining.

The big problem everyone sees

Look, the criticism is widespread and pretty compelling. Privacy advocates like Damini Satija at Amnesty Tech call the ban a “quick fix” that distracts from needed reforms like stronger data protection laws. The Free Speech Union of Australia points out the obvious: kids will just use a VPN to bypass it, which teaches them to circumvent authority and undermines privacy. Then there’s the Digital Industry Group (DIGI), which represents the platforms, warning that this will shove kids into “darker, less safe corners of the Internet” without any guardrails.

Here’s the thing: the law seems to assume platforms can magically know someone’s age without collecting intrusive data. The government’s own report admitted there’s no “single ubiquitous solution.” So we’re left with a system that’s either easy to fool or creepily invasive. And what about the kids who rely on these platforms for community, especially marginalized teens? It’s a blunt instrument for a nuanced problem.

A global policy test lab

Australia is basically becoming a test case for a policy that’s gaining scary momentum worldwide. The UK took a different tack with its Online Safety Act, requiring age gates for harmful content but not an outright ban. Several U.S. states like Florida and Texas are implementing age verification rules, with some trying to shift the burden to Apple and Google’s app stores. The European Parliament is now flirting with a similar under-16 ban. You can read the full text of Australia’s Online Safety Amendment Bill to see the legal framework.

This feels like a political reaction to the moral panic fueled by books like Jonathan Haidt’s *The Anxious Generation*. But does banning a 15-year-old from Instagram really address the complex algorithms, harassment, and data harvesting that cause harm? Or does it just let politicians say they’ve “done something” while the underlying architecture remains untouched? I think we all know the answer. The real work—better design, real privacy laws, and actual accountability—is much harder than just locking accounts.