Medical artificial intelligence companies are facing a regulatory maze as they prepare for the European Union’s AI Act, with new analysis revealing significant implementation challenges specifically for radiology AI systems. According to reports examining the practical implications, the legislation’s requirements around risk management and dataset quality are creating substantial gray areas that could impact patient safety and healthcare equity.

Risk Management Variability Creates Compliance Uncertainty

Under Article 9, the AI Act mandates comprehensive risk management systems for high-risk AI applications, building on existing medical device regulations. However, sources indicate the legislation offers limited practical guidance on how to design these systems or how to identify potential risks through standardized analysis methods. This ambiguity is reportedly leading to significant variability in how providers approach compliance.

Industry analysts suggest that some companies are aligning their risk management with established standards such as ISO 14971, while others are building simplified, ad hoc systems based on internal checklists. As a result, risk management could be markedly more effective for some products than for others, even when the AI systems share the same medical purpose and technical foundations.

Meanwhile, the lack of specific metrics and thresholds for defining risks creates further complications. Reports emphasize that for radiology AI, input from multidisciplinary teams is essential to identify both known and reasonably foreseeable risks. The broad wording of the requirements, while intended to accommodate diverse AI applications, may in practice complicate implementation and ultimately affect patient rights and safety.

Dataset Representativeness Emerges as Critical Challenge

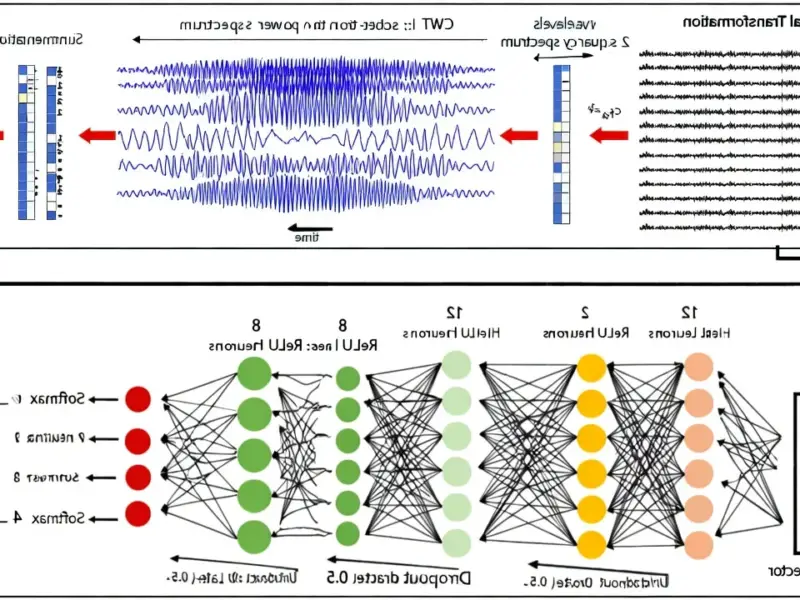

Perhaps the most significant hurdle involves Article 10’s requirement that training datasets be “sufficiently representative and complete” for their intended medical purposes. Analysis of current practices reveals that the vast majority of peer-reviewed radiology AI studies have utilized limited, non-representative datasets that introduce various types of bias.

Surprisingly, most published research fails to provide raw anonymized data, detailed dataset descriptions, or methodologies for quantifying dataset representativeness. According to industry observers, these studies typically demonstrate feasibility in limited contexts but fall short of meeting the new regulatory standards for real-world deployment.

Commercial vendors are not faring much better: reports indicate that many fail to disclose sufficient dataset or technical details. Under Article 13’s transparency requirements, such practices will no longer be acceptable, and companies will have to provide enough detail for healthcare providers to interpret AI system outputs properly.

The Berlin Hospital Example Highlights Practical Complexities

Imagine developing an AI system to detect lung cancer on chest radiographs for Berlin’s busiest public hospital. Analysis suggests the training dataset would need to reflect the city’s multicultural population, including the diverse ethnic groups in its immigrant communities, to avoid selection bias. The dataset must also account for varied socioeconomic backgrounds and environmental exposures, since factors such as healthcare access, smoking habits, and urban air pollution can significantly influence how the disease presents.

Geographic and environmental relevance become critical considerations. Even variability in individual features matters: a patient who smokes one cigarette a day and one who smokes a pack a day should both be represented, with adequate sampling across these patient subgroups. The disease spectrum itself requires careful attention, including the cancer subtypes, stages, and comorbidities found in Berlin’s population.
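To make the subgroup-sampling point concrete, here is a minimal Python sketch that counts cases per subgroup and flags any subgroup that falls below a minimum sample size. The function name `undersampled_subgroups`, the smoking-exposure labels, and the threshold of 50 cases are all hypothetical illustrations; neither the AI Act nor any guideline mentioned here specifies such a number.

```python
from collections import Counter

# Hypothetical threshold for illustration only; no regulation prescribes this value.
MIN_CASES_PER_SUBGROUP = 50


def undersampled_subgroups(records, key, expected_groups, min_cases=MIN_CASES_PER_SUBGROUP):
    """Return {subgroup: count} for every expected subgroup with fewer than min_cases records.

    records: iterable of dicts describing cases, e.g. {"smoking": "heavy"}.
    key: the attribute to stratify by (hypothetical field name).
    expected_groups: all subgroups present in the intended patient population,
        so that groups absent from the dataset are reported with a count of 0.
    """
    counts = Counter(r[key] for r in records)
    return {
        group: counts.get(group, 0)
        for group in expected_groups
        if counts.get(group, 0) < min_cases
    }


# Example with made-up smoking-exposure bands.
cases = (
    [{"smoking": "never"}] * 120
    + [{"smoking": "light"}] * 40   # e.g. a few cigarettes a day
    + [{"smoking": "heavy"}] * 75   # e.g. a pack or more a day
)
print(undersampled_subgroups(cases, "smoking", ["never", "light", "heavy", "former"]))
# {'light': 40, 'former': 0}
```

Passing the full list of expected subgroups is the key design choice: a group that is missing from the dataset entirely (here, former smokers) would otherwise never show up in the counts at all.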

Completeness presents equally complex challenges. Large datasets alone are not enough; expert annotations from multiple board-certified radiologists are essential. These annotations need to go beyond basic diagnoses to include detailed information on lesion location, size, and type. Clinical metadata such as smoking history, symptoms, and family medical history are equally critical, as are temporal data capturing disease progression and treatment response.

Technical variables add another layer of complexity. The dataset must account for operator-to-operator variability, differences in radiographic image quality, and variation across X-ray machines. Even image artifacts that can obscure or mimic pathologies, such as hair, jewelry, motion blur, overlapping structures, and skin folds, must be adequately represented.

Standardization Gap Creates Regulatory Gray Area

The core problem, according to industry analysis, is the absence of standardized methods to quantitatively measure dataset representativeness and completeness for radiology AI across different clinical contexts. Current guidelines, including the updated Checklist for Artificial Intelligence in Medical Imaging, reportedly provide limited practical help, essentially telling developers to “describe how well the data align with the intended use” without clarifying how to actually achieve or measure this alignment.
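Purely as an illustration of what a quantitative measure could look like, the sketch below computes the total variation distance between a dataset’s subgroup proportions and a reference population’s proportions, that is, half the sum of the absolute differences between the two sets of shares. This is a generic statistical distance chosen here as an example, not a method endorsed by the AI Act or by the Checklist for Artificial Intelligence in Medical Imaging. The function name, subgroup labels, and reference shares are invented, and what distance would count as acceptable for a given clinical context remains exactly the open question described above.

```python
from collections import Counter


def total_variation_distance(records, key, reference):
    """Total variation distance between dataset and reference subgroup shares.

    reference: maps each subgroup to its share of the target population
        (hypothetical figures, e.g. from local census data); shares should sum to 1.
    Returns a value between 0 (identical mix) and 1 (no overlap at all)
    when the reference shares sum to 1.
    """
    counts = Counter(r[key] for r in records)
    n = sum(counts.values())
    if n == 0:
        raise ValueError("dataset is empty")
    # Include subgroups that appear in only one of the two distributions.
    groups = set(reference) | set(counts)
    return 0.5 * sum(abs(counts.get(g, 0) / n - reference.get(g, 0.0)) for g in groups)


# Made-up example: the dataset over-represents group "A" and lacks group "C" entirely.
dataset = [{"group": "A"}] * 70 + [{"group": "B"}] * 30
population = {"A": 0.5, "B": 0.3, "C": 0.2}
print(round(total_variation_distance(dataset, "group", population), 3))  # 0.2
```

A single summary number like this hides which subgroups drive the gap, so it would likely need to be reported alongside per-subgroup counts rather than on its own.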

This standardization gap creates significant uncertainty for AI developers and healthcare providers alike. If either representativeness or completeness is inadequately addressed, certain patient groups could face underdiagnosis or inequitable access to healthcare through AI systems. The issue extends beyond data quality to broader regulatory elements including algorithm transparency, risk management, and data security.

Meanwhile, the global regulatory landscape remains fragmented, with leading countries developing their own frameworks rather than converging toward international standards. This divergence between the EU, United States, Australia, China and other regions creates additional complexity for companies developing radiology AI solutions for global markets.

As implementation of the EU AI Act progresses, the medical AI industry faces a critical period of adaptation. The success of this regulatory framework may ultimately depend on clearer, more practical guidance that balances patient safety with innovation, a challenge that will require close collaboration between regulators, developers, and healthcare providers across Europe and beyond.