According to Nature, new research benchmarking large language models for personalized health interventions reveals concerning inconsistencies in model performance. The study found that while LLMs generally scored high on safety considerations, their accuracy varied significantly across different validation requirements and medical conditions. This inconsistency highlights the risks of relying on current AI models for medical recommendations without human oversight.

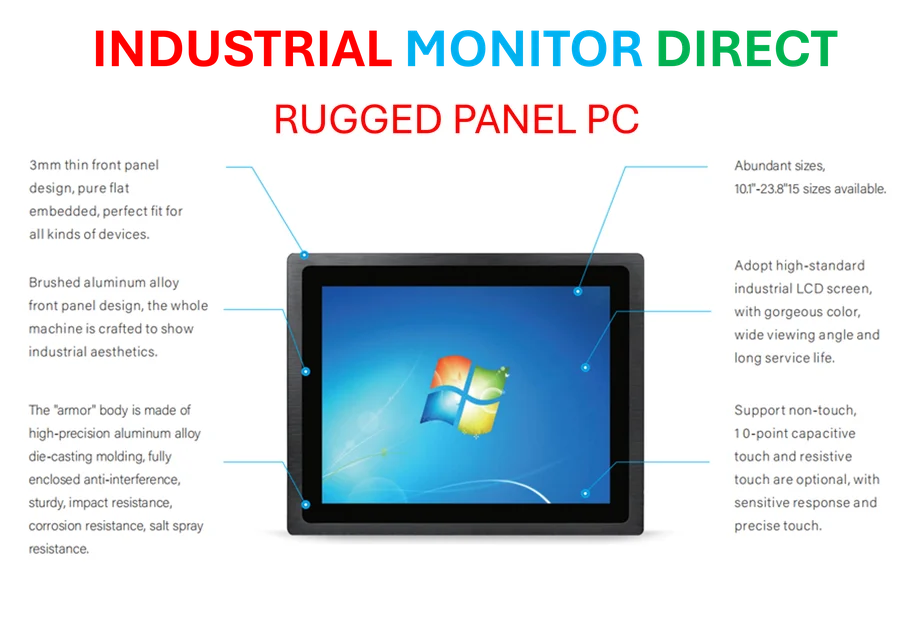



The LLM-as-a-Judge Paradigm

The study’s use of Cohen’s kappa for measuring inter-rater reliability represents a sophisticated approach to validation, but it also reveals fundamental challenges in AI evaluation. When an LLM evaluates another LLM’s output, we’re essentially creating a hall of mirrors where AI systems validate each other based on patterns learned from similar training data. This approach, while efficient for scaling evaluation, risks creating echo chambers where models reinforce shared biases rather than providing genuine external validation. Kappa measures how often raters agree beyond what chance alone would produce, so the high scores reported (0.69-0.87) suggest consistency but don’t necessarily indicate correctness. That distinction is critical in medical contexts where patient safety is paramount.
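To make the consistency-versus-correctness point concrete, here is a minimal sketch of Cohen’s kappa, computed as (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The labels and the two "judges" below are made-up illustrations, not data from the study.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items where the two raters match.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical example: two judges agree perfectly, yet both are wrong.
judge_a = ["safe", "safe", "unsafe", "unsafe"]
judge_b = ["safe", "safe", "unsafe", "unsafe"]
ground_truth = ["unsafe", "unsafe", "safe", "safe"]

kappa = cohens_kappa(judge_a, judge_b)
accuracy = sum(a == t for a, t in zip(judge_a, ground_truth)) / len(ground_truth)
print(kappa, accuracy)
```

In this toy case the judges reach perfect agreement while matching none of the reference labels, which is why agreement between LLM judges still needs to be checked against an independent reference.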

The Overfitting Paradox in Medical AI

The surprising underperformance of Llama3 Med42 8B, despite its specialized biomedical training, points to a deeper issue in AI development: the overfitting paradox. When models are too closely aligned with specific training corpora, they may excel at reproducing textbook knowledge while failing to adapt to novel clinical scenarios. This is particularly problematic in personalized medicine, where patient presentations rarely match textbook cases perfectly. The finding that open-source models generally underperformed proprietary ones raises important questions about resource allocation in medical AI development and whether the open-source community can compete with well-funded corporate research in safety-critical domains.


RAG’s Unexpected Performance Impact

The study’s discovery that Retrieval-Augmented Generation sometimes decreased accuracy rather than improving it challenges conventional wisdom about knowledge enhancement strategies. In medical applications, where it is commonly assumed that more information leads to better outcomes, this finding suggests that irrelevant or conflicting information from external sources can actually degrade model performance. This has significant implications for healthcare systems considering RAG implementations, as the quality control of retrieved information becomes as important as the base model’s capabilities. Because real-world patient data is so heterogeneous, retrieval systems must be exceptionally precise to avoid introducing noise.
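One simple form of quality control is to drop weakly relevant passages before they reach the model. The sketch below is an assumption-laden illustration, not the study’s pipeline: the `Passage` type, the `score` field (a relevance score in [0, 1] supplied by some retriever), and the threshold values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    text: str
    score: float  # hypothetical relevance score in [0, 1] from the retriever


def select_context(passages, min_score=0.75, max_passages=3):
    """Keep only passages at or above min_score, best first, capped at max_passages."""
    kept = [p for p in passages if p.score >= min_score]
    kept.sort(key=lambda p: p.score, reverse=True)
    return kept[:max_passages]


# Hypothetical retrieval results for a single patient query.
retrieved = [
    Passage("Guideline section matching the patient's condition", 0.91),
    Passage("Loosely related article on a different condition", 0.42),
    Passage("Dosing table for the relevant drug class", 0.80),
]

for passage in select_context(retrieved):
    print(f"{passage.score:.2f}  {passage.text}")
```

A fixed threshold is only a starting point. Its value would need to be tuned and validated per condition, and returning no context at all may be preferable to passing along noise.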

Regulatory and Implementation Challenges

Looking forward, the inconsistent performance across age groups and medical conditions suggests that regulatory approval for AI in personalized medicine will need to be condition-specific rather than blanket. The finding that models performed better on common degenerative diseases than on rare hormonal conditions indicates that training data imbalances are translating directly into performance disparities. This creates ethical concerns about equitable access to AI-assisted healthcare, particularly for patients with rare conditions or atypical presentations. As healthcare systems consider integrating these technologies, they’ll need to develop robust monitoring systems to detect when models are operating outside their validated domains and ensure human oversight remains central to clinical decision-making.
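A monitoring check of this kind can start as a lookup against the conditions and age ranges a model was actually validated on. The following is a rough sketch under stated assumptions: `ValidatedDomain`, the condition names, and the age ranges are hypothetical placeholders, not values from the study or from any regulator.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ValidatedDomain:
    condition: str  # hypothetical condition identifier
    min_age: int    # inclusive lower bound of the validated age range
    max_age: int    # inclusive upper bound of the validated age range


def is_within_validated_domain(condition, age, validated):
    """True only if the condition and age fall inside some validated domain."""
    return any(
        d.condition == condition and d.min_age <= age <= d.max_age
        for d in validated
    )


# Hypothetical validation record for one model.
validated = [ValidatedDomain("osteoarthritis", 40, 80)]

for condition, age in [("osteoarthritis", 65), ("osteoarthritis", 30), ("rare_endocrine_disorder", 65)]:
    status = "in domain" if is_within_validated_domain(condition, age, validated) else "escalate to clinician"
    print(condition, age, status)
```

Requests inside a validated domain would still go through normal clinical review. The check only flags cases where the model’s output should not be relied on at all without escalation.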