According to SciTechDaily, researchers at Tsinghua University led by Professor Hongwei Chen have developed an optical feature extraction engine (OFE2) that operates at 12.5 GHz, breaking the 10 GHz barrier that has limited optical computing systems. The system performs matrix-vector multiplication in less than 250.5 picoseconds, the shortest latency reported among comparable optical computing implementations. The work, detailed in Advanced Photonics Nexus on October 8, 2025, uses an integrated on-chip design with tunable power splitters and precise delay lines to overcome the phase stability problems that previously capped optical computing speeds. The team has demonstrated practical applications in both image processing for medical CT scans and quantitative trading, where the system processes real-time market data to generate profitable trading actions. The advance points to a potential shift toward photonics-based computation for data-intensive AI applications.
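For a sense of scale, the short snippet below converts the two headline figures into clock periods. It treats 12.5 GHz as the rate at which new input values enter the engine, which is our reading of the report rather than a detail it spells out. Under that assumption each value occupies 80 ps, compared with 100 ps at 10 GHz, and the 250.5 ps latency bound spans a little over three such periods.

```python
# Headline figures reported for OFE2. Treating 12.5 GHz as the input
# symbol rate is an interpretive assumption, not a confirmed detail.
CLOCK_HZ = 12.5e9        # operating rate: 12.5 GHz
LATENCY_S = 250.5e-12    # matrix-vector multiplication latency bound: 250.5 ps


def period_ps(freq_hz: float) -> float:
    """Duration of one clock period, in picoseconds."""
    return 1e12 / freq_hz


def periods_within(latency_s: float, freq_hz: float) -> float:
    """Number of clock periods that fit inside a given latency."""
    return latency_s * freq_hz


if __name__ == "__main__":
    print(f"period at 12.5 GHz: {period_ps(CLOCK_HZ):.1f} ps")
    print(f"period at 10 GHz:   {period_ps(10e9):.1f} ps")
    print(f"latency spans {periods_within(LATENCY_S, CLOCK_HZ):.2f} clock periods")
```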



Why This Optical Breakthrough Changes Everything

Breaking the 10 GHz barrier matters because of where conventional hardware is stuck. Silicon processors face physical limits on clock speed and heat dissipation, and the resulting power-density wall has kept clock rates roughly flat since the mid-2000s. Optical computing works on different principles. Passive optical elements such as splitters, delay lines, and diffractive structures do not dissipate heat the way switching transistors do, so the computation itself is far less constrained by thermal limits, although lasers, modulators, and photodetectors still consume power. The Tsinghua team's achievement is more than an incremental improvement: it shows that an integrated photonic system can take on real-world workloads in settings where speed and latency are the deciding factors.

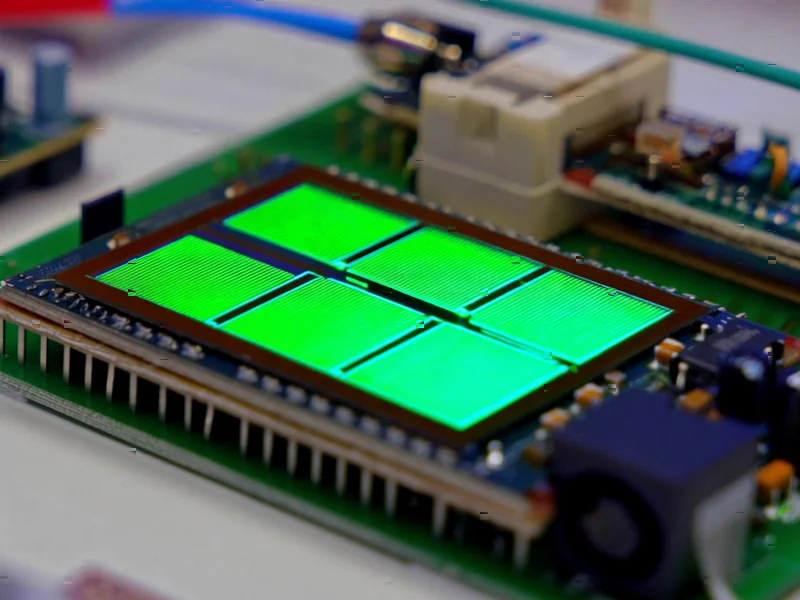

The Technical Innovation Behind the Breakthrough

What makes this research particularly noteworthy is how the team solved the coherence stability problem that has hampered high-speed optical computing. Optical computation of this kind depends on keeping the relative phase of light in different paths stable, and fiber-based components introduce phase perturbations that become increasingly damaging at higher frequencies. By building the system on-chip, with tunable power splitters and precise delay lines, the researchers created a platform that preserves signal integrity at 12.5 GHz. The computation itself relies on diffraction: as light propagates and interferes, the optical hardware carries out a matrix-vector multiplication in a single pass rather than through sequential electronic steps. This is a fundamentally different computational paradigm, and for specific AI workloads it could complement, or in some cases replace, conventional digital logic.
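The data path described above can be sketched numerically. The Python model below is a simplified illustration, not the team's design. It assumes that the splitter fans a serial input stream out into parallel branches, that branch k is delayed by k symbol periods so each time step presents a sliding window of recent inputs side by side, and that the optical stage then applies a fixed weight matrix to that window. The split coefficients and weights are hypothetical toy values, and optical loss, phase noise, and photodetection are ignored.

```python
from typing import List, Sequence

# Idealized, noise-free model. Real hardware operates on optical fields,
# suffers loss and phase drift, and reads results out with photodetectors;
# none of that is modeled here.


def delay_line_frames(signal: Sequence[float], split: Sequence[float]) -> List[List[float]]:
    """Splitter plus delay lines: branch k receives a fraction split[k] of the
    input and is delayed by k symbol periods, so at step t it carries
    split[k] * signal[t - k]. Values from before the stream starts are 0."""
    n_branches = len(split)
    frames = []
    for t in range(len(signal)):
        frames.append([
            split[k] * signal[t - k] if t - k >= 0 else 0.0
            for k in range(n_branches)
        ])
    return frames


def matvec(matrix: Sequence[Sequence[float]], vector: Sequence[float]) -> List[float]:
    """Plain matrix-vector product, standing in for the optical linear stage."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def extract_features(signal, split, weights):
    """Apply the same weight matrix to every parallel frame: one feature vector per step."""
    for row in weights:
        if len(row) != len(split):
            raise ValueError("each weight row needs one entry per branch")
    return [matvec(weights, frame) for frame in delay_line_frames(signal, split)]


if __name__ == "__main__":
    stream = [1.0, 2.0, 3.0, 4.0]   # toy serial input, one value per period
    split = [1.0, 1.0, 1.0]         # hypothetical unit coefficients, for readability
    weights = [
        [1.0, 0.0, -1.0],           # x[t] - x[t-2]: a simple change detector
        [0.5, 0.5, 0.0],            # mean of the two most recent values
    ]
    for t, features in enumerate(extract_features(stream, split, weights)):
        print(t, features)          # prints 0 [1.0, 0.5] through 3 [2.0, 3.5]
```

In this simplified picture each output row is a weighted sum over a sliding window, so the engine behaves like a bank of one-dimensional filters run over the input stream. That framing is one way to see how the same kind of hardware could be pointed at image data or at market time series.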

Immediate Market Implications and Applications

The demonstrated applications in quantitative trading and medical imaging suggest where this technology could see its earliest adoption. In high-frequency trading, where even microsecond advantages can be worth a great deal, feature extraction at 12.5 GHz with a latency of roughly 250 picoseconds could offer an edge that electronic systems would struggle to match. Similarly, in medical imaging, faster processing could support applications such as real-time surgical guidance or rapid CT analysis. The research also shows that AI networks using OFE2 required fewer electronic parameters than baseline systems, which suggests that hybrid optical-electronic architectures could play a growing role in next-generation AI infrastructure.

The Road to Commercialization: Challenges Ahead

Despite the impressive laboratory results, significant hurdles remain before this technology reaches widespread commercial deployment. Manufacturing integrated photonic systems at scale presents cost and yield challenges that the semiconductor industry has spent decades optimizing for electronic chips. The need for precise optical alignment and temperature stability in practical environments could limit initial applications to controlled settings like data centers or specialized equipment. Additionally, programming models for optical computing systems remain immature compared to the sophisticated software ecosystems supporting traditional processors. The transition from laboratory demonstration to production-ready systems will require substantial investment in both manufacturing infrastructure and software development tools.

The Future of Optical Computing in AI Infrastructure

Looking forward, this breakthrough positions optical computing as a viable contender for specific AI workloads where speed and energy efficiency outweigh general-purpose flexibility. We’re likely to see initial deployment in specialized accelerators for financial services, telecommunications, and medical imaging within the next 3-5 years. The research published in Advanced Photonics Nexus represents a critical milestone in the maturation of photonic computing from laboratory curiosity to practical technology. As Professor Chen’s team seeks collaborations with partners having data-intensive computational needs, we can expect to see more real-world validation of optical computing’s potential to transform how we process information in an increasingly data-driven world.
