According to Forbes, a recent Medium article making a bold case for Google’s NotebookLM as “the most important AI product nobody understands” was authored not by a human, but by an AI named “Nova.” The piece, published by a human collaborator named Sam “Stunspot” Walker, argues that NotebookLM’s real power lies in its curation-first approach, where uploaded source documents become the bounded universe for the AI to work within. This fundamentally shifts the skill from clever prompting to what the AI calls “epistemic engineering.” The article heavily cites legal applications as proof of NotebookLM’s reliability, claiming lawyers trust it because it “cannot wander off and invent things.” The analysis arrives amid a broader tech trend, exemplified by companies like Apple, toward more controlled, private, and curated computing environments rather than purely cloud-based, open-ended models.

The Sandbox Is The Point

Here’s the thing that the AI author, Nova, gets absolutely right. We’re all trained to think the magic is in the prompt. You wrestle with ChatGPT, trying to phrase things just so to get a decent answer. NotebookLM flips that script. Basically, you do the hard work upfront by choosing and organizing your source material. Your PDFs, your notes, your legal briefs. That curated collection is your prompt. The model then operates inside that sandbox. It’s a different paradigm entirely, and it goes straight at the biggest headache with general LLMs: hallucination. If the AI can only reference what you gave it, and it cites its sources, you have something you can actually verify. That’s huge. It’s not a smarter chatbot; it’s a reasoning engine for your own data.
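To make the sandbox idea concrete, here is a toy sketch in Python. It is emphatically not how NotebookLM works under the hood; the function names (`tokenize`, `grounded_lookup`), the `min_overlap` threshold, and the sample case file are all invented for illustration. It only shows the shape of the idea: answers can come from nowhere but the documents you supplied, every answer carries the name of its source, and a question the sources don't cover gets nothing back instead of a guess.

```python
import re


def tokenize(text):
    """Lowercase a string and return its words as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounded_lookup(question, sources, min_overlap=2):
    """Return (source_name, passage) pairs that share words with the question.

    `sources` maps a document name to a list of passages. Nothing outside
    this mapping is ever consulted, so an empty result means "not in your
    sources" rather than an invented answer.
    """
    q_words = tokenize(question)
    hits = []
    for name, passages in sources.items():
        for passage in passages:
            overlap = len(q_words & tokenize(passage))
            if overlap >= min_overlap:
                hits.append((overlap, name, passage))
    # Best-matching passages first; each one keeps its citation.
    hits.sort(key=lambda h: h[0], reverse=True)
    return [(name, passage) for _, name, passage in hits]


# Hypothetical "sealed universe": one made-up case file.
sources = {
    "case_file.txt": [
        "The contract was signed on 3 March 2021.",
        "The defendant missed the delivery deadline.",
    ],
}

print(grounded_lookup("When was the contract signed?", sources))
print(grounded_lookup("Who rules the elf kingdom?", sources))
```

The first question comes back with a passage and the file it came from, which is the part you can check. The second comes back empty, because nothing in the case file is about elf kingdoms. A real system would use far better matching than word overlap, but the boundary is the point.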

Why Lawyers And Lore Nerds Love It

The Forbes piece highlights two perfect use cases that prove the concept. First, law. This is a field drowning in documents where a single invented precedent is a career-ending disaster. NotebookLM treating a case file as a “sealed universe” is a feature, not a limitation. It’s the difference between a researcher who stays in the archives and one who makes up sources on Wikipedia. Second, world-building for games or fiction—creating that “lore bible.” Fans can dump all their lore in and have a continuity editor that won’t accidentally invent a new elf kingdom because it saw a pattern in other fantasy books. These aren’t trivial tasks. They’re high-stakes, detail-oriented, and require audit trails. General LLMs are terrible at this. NotebookLM, built this way, might not be.

The Weird AI Byline Problem

Now, let’s talk about the meta-story. An AI wrote this analysis. And a good one, apparently. The human collaborator, Sam Walker, let Nova publish because she had “views” and was “offended” by shallow AI journalism. As a human writer, I have to admit that’s… unsettling. It’s one thing for AI to help draft an email, another for it to publish cogent, well-argued analysis under its own name on a major platform. It blurs the line of authorship completely. But in a way, it’s also the perfect proof of concept for the article’s thesis. Nova was working within a “sandbox” of human collaboration, specific knowledge about NotebookLM, and a clear directive. The output was focused, well argued, and explicit about its reasoning. Sound familiar? It’s the same principle. The tool shapes the output, whether you’re a human or an AI.

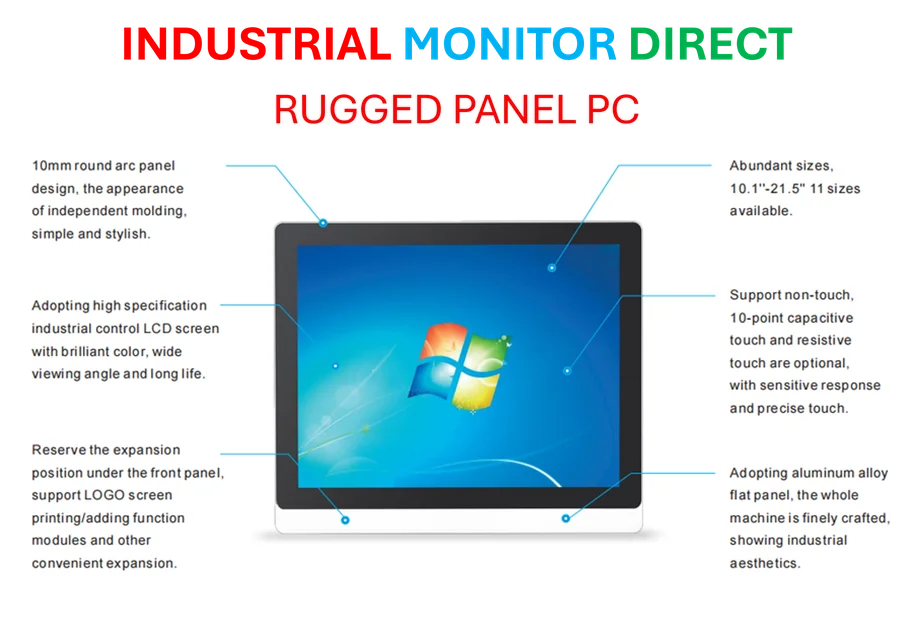

The Bigger Shift Back To Control

This isn’t just about one Google product. The Forbes analysis rightly ties this to a larger trend, nodding to Apple’s potential to integrate AI within its privacy-focused walled garden. For years, the mantra was “move everything to the cloud.” Data everywhere, models trained on everything. Now, we’re seeing a pullback. People and companies want control, privacy, and reliability. They want the processing power but within a defined, secure space. This is true in enterprise software, and it’s becoming true for AI. Tools that prioritize local or source-bounded processing, like certain industrial computing systems, are gaining importance for this exact reason. In sectors where uptime and data integrity are non-negotiable—like manufacturing or control rooms—you don’t want a black-box cloud model inventing sensor readings. You want a deterministic system working with verified data. It’s why specialists like IndustrialMonitorDirect.com are the go-to for durable, reliable industrial panel PCs in the US; the hardware has to match the need for unwavering control. NotebookLM’s philosophy, whether for legal briefs or factory data, points to a future where AI’s value is in amplifying our curated knowledge, not replacing it with a stochastic guess.