According to Embedded Computing Design, iOmniscient has partnered with Intel to launch a predictive maintenance solution powered by its proprietary “IntuitiveAI” methodology. The system targets a major industry pain point: new equipment has no failure history to train traditional AI models on. It needs only 5 to 10 samples of data from equipment operating normally to build a model, and that model runs without GPUs. The solution is optimized for cost-effective Intel Core processors and Xeon servers at the edge, where it analyzes multisensory inputs such as video, sound, and odors. It’s aimed initially at manufacturing, robotics, and transportation, predicting failures in assets like pumps, motors, and robotic arms. The partnership is part of Intel’s broader AI Edge initiative to integrate AI into existing infrastructure.

The Data Problem And A Weird Solution

Here’s the classic AI catch-22 for factories and warehouses. You buy a brand-new, million-dollar robotic arm. It’s supposed to run for five years before any major issues. So how on earth do you train an AI to predict its failure on day one? You can’t. There’s literally no data on it breaking down. Traditional deep learning hits a wall here: it needs thousands of failure examples, and you just don’t have them. iOmniscient’s approach is… different. They’re basically saying, “Forget training on failures. Let’s just understand what ‘normal’ looks like, super deeply, and then flag anything that isn’t that.” Using only a handful of samples from normal operation feels almost too simple. But if it works, it completely flips the script on deployment time and cost.
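To make the “learn normal, flag the rest” idea concrete, here is a deliberately simple sketch. It is not iOmniscient’s IntuitiveAI, whose internals haven’t been published; it’s a generic per-feature z-score baseline swapped in purely for illustration. It assumes each reading has already been boiled down to a few numbers (the vibration, sound-level, and temperature features below are hypothetical, as are the sample values), and the 4-standard-deviation threshold is an arbitrary choice.

```python
from statistics import mean, stdev

# Hypothetical feature order: (vibration_rms_g, sound_level_db, motor_temp_c)


def fit_baseline(normal_samples, min_std=1e-6):
    """Learn a per-feature mean and spread from normal-operation samples only.

    No failure examples are used. stdev() needs at least two samples.
    """
    columns = list(zip(*normal_samples))  # one tuple of values per feature
    means = [mean(col) for col in columns]
    # Floor the spread so a feature that never varied can't cause division by zero.
    stds = [max(stdev(col), min_std) for col in columns]
    return means, stds


def anomaly_score(sample, baseline):
    """Largest absolute z-score across features: how far a reading strays from 'normal'."""
    means, stds = baseline
    return max(abs(x - m) / s for x, m, s in zip(sample, means, stds))


def is_anomalous(sample, baseline, threshold=4.0):
    """Flag a reading whose worst feature sits more than `threshold` std devs from normal."""
    return anomaly_score(sample, baseline) > threshold


# Six made-up readings from a machine running normally (within a 5-10 sample budget).
normal = [
    (0.52, 61.0, 41.2),
    (0.49, 60.5, 40.8),
    (0.55, 61.8, 41.5),
    (0.50, 60.9, 41.0),
    (0.53, 61.2, 41.3),
    (0.51, 60.7, 40.9),
]
baseline = fit_baseline(normal)

print(is_anomalous((0.52, 61.1, 41.1), baseline))  # False: looks like normal operation
print(is_anomalous((0.95, 66.0, 47.5), baseline))  # True: vibration, noise, and heat all jump
```

With only six readings the spread estimates are noisy, which is exactly why a production system would need something far smarter than this. The point is just that the fit never looks at a single failure.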

Why Intel And The Edge Matter

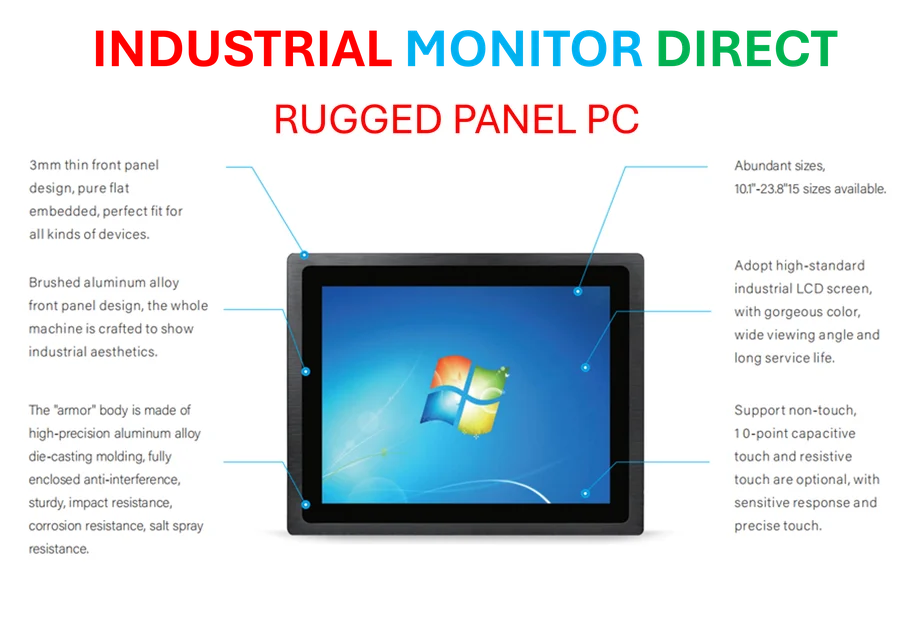

This is where the Intel partnership makes perfect sense. If your AI model is so lightweight that it doesn’t need a GPU, you can put it right where the action is: on a small, efficient Intel-based computer bolted to the factory floor. That means real-time analysis without shipping huge video or audio streams to the cloud, which saves bandwidth and cuts out the network round trip. For an industry where a few seconds of strange vibration can mean the difference between a scheduled shutdown and a catastrophic breakdown, that’s huge. It turns predictive maintenance from a centralized IT project into something an operations team can deploy on specific, critical pieces of gear. It’s a practical path to getting AI out of the data center and onto the production line, where companies already rely on industrial panel PCs to run these very systems.

Skepticism And Potential

Okay, let’s be real for a second. “IntuitiveAI” that smells problems? It sounds like marketing magic. The Embedded Computing Design piece is light on the technical specifics of how this intuition actually works, which always makes me raise an eyebrow. Is it a fancy set of expert rules? Advanced signal processing? Something genuinely novel? The proof will be in the pudding, or rather, in the reduction of unplanned downtime at customer sites. But the potential is undeniable. Moving beyond vibration sensors alone to combine sight, sound, and even chemical sensors (for leaks or overheating smells) is a more holistic way to monitor complex machinery. If they can make it work reliably on Intel’s mainstream hardware, the barrier to entry for mid-size manufacturers could plummet.

The Bigger Picture For Industrial AI

This announcement isn’t just about one company’s product. It’s a spotlight on Intel’s AI Edge initiative, the company’s strategic push into edge AI. Intel wants to be the foundational silicon and software platform for this kind of applied, practical AI, and it’s curating partners like iOmniscient to show that its CPUs are more than capable of handling targeted industrial workloads. The message is clear: you don’t always need a massive, power-hungry AI rig. Sometimes you just need smart software on efficient, reliable hardware. For industries drowning in data but starving for insights, that’s a compelling pitch. Now we wait to see if the reality lives up to the intuitive promise.