New Framework for Auditable AI Systems

The MIT Media Lab has launched a new initiative called the Scalable AI Program for the Intelligent Evolution of Networks (sAIpien) that aims to make artificial intelligence systems truly auditable at the board level, according to reports. Rather than developing another AI model, the program focuses on creating human-AI interfaces that teams can inspect, adapt, and use for collective decision-making across healthcare, urban planning, and corporate environments.

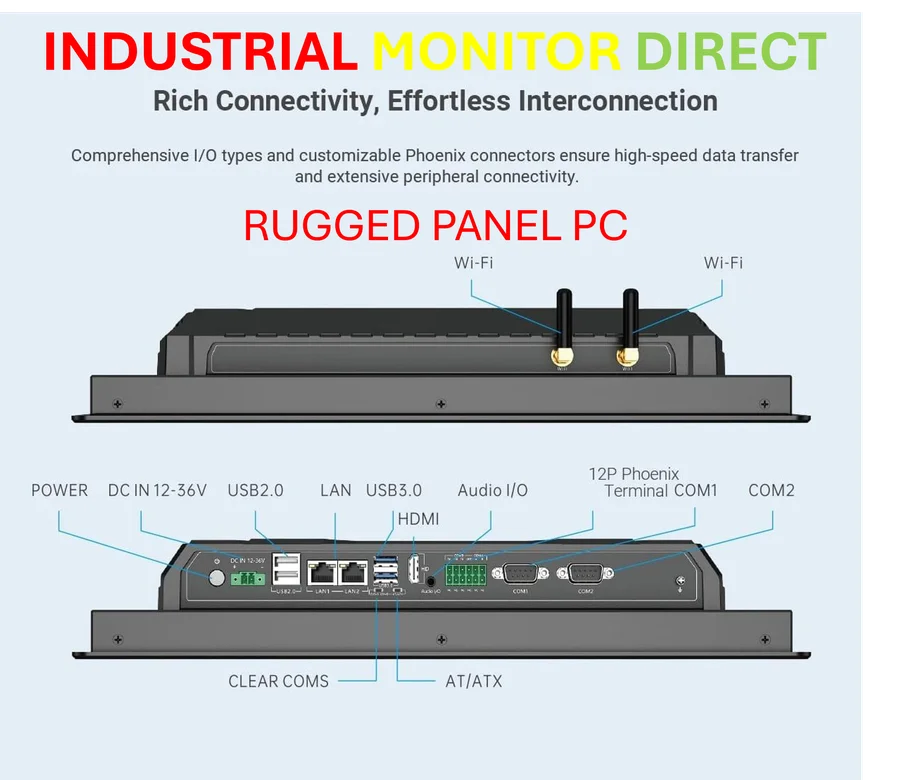

From Policy to Engineering Discipline

The sAIpien initiative reportedly shifts the conversation around responsible AI from theoretical policy discussion to practical engineering discipline. Sources indicate the program links user-experience standards directly to traceable governance artifacts, creating a clear line from interface design to executive accountability. The approach builds on the Media Lab’s longstanding work in human-computer interaction while introducing the rigor typically associated with financial controls or clinical safety systems, according to related coverage.

Human-Centered Design Philosophy

According to program documentation, sAIpien employs a Humane, Calm, and Intelligent Interfaces (HCI²) framework that prioritizes tools that enhance human coordination rather than merely keeping humans “in the loop.” Dr. Hossein Rahnama, a visiting professor and founding faculty member, summarized the philosophy: “AI should make us more connected, not more distracted. When the machine works, people understand each other better.”

Five Key Imperatives

Analysts suggest the program operates through five core principles:

- AI ecology: viewing technology as evolving through cooperation

- AI literacy: teaching executives how AI actually behaves

- Data and decision integrity: ensuring outcomes are explainable and testable

- Cross-disciplinary design: embedding ethics into engineering

- Human-centered design: prioritizing dignity and transparency

These principles guide the development of prototypes and digital twins that can survive the transition from laboratory testing to boardroom implementation.

Digital Twins for Ethical Testing

One of sAIpien’s distinguishing tools is the digital twin: a simulation that lets organizations test policy or product decisions before real-world deployment. The report states these could include hospital triage systems balancing patient loads and resource equity, or city mobility models evaluating trade-offs between commute times, emissions, and accessibility. Such systems reportedly turn abstract ethical considerations into operational experiments where performance, fairness, and trust can be quantified before launch.
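To make the idea of quantifying performance and fairness in a simulation more concrete, here is a minimal Python sketch. It is not sAIpien’s tooling: the waiting-room data, the two queueing policies, the fixed ten-minute service time, and the function names (run_twin, score) are all hypothetical. The toy twin replays one bed serving a queue under each policy and reports two numbers: the mean wait of the most urgent patients (performance) and the gap between two districts’ mean waits (a crude equity measure).

```python
from statistics import mean

# Hypothetical waiting-room snapshot: (patient id, severity 1=urgent..3=minor, district).
# List order stands in for arrival order.
PATIENTS = [
    ("p1", 3, "north"),
    ("p2", 1, "south"),
    ("p3", 2, "north"),
    ("p4", 1, "north"),
    ("p5", 3, "south"),
    ("p6", 2, "south"),
]


def first_come(patients):
    """Policy A: serve patients in arrival order."""
    return list(patients)


def by_severity(patients):
    """Policy B: most urgent first; sorted() is stable, so ties keep arrival order."""
    return sorted(patients, key=lambda p: p[1])


def run_twin(patients, policy, service_minutes=10):
    """Simulate one bed serving patients back to back; return wait (minutes) per id."""
    order = policy(patients)
    return {pid: i * service_minutes for i, (pid, _, _) in enumerate(order)}


def score(patients, waits):
    """Return (mean wait of severity-1 patients, gap between district mean waits)."""
    urgent = mean(waits[pid] for pid, sev, _ in patients if sev == 1)
    by_district = {}
    for pid, _, district in patients:
        by_district.setdefault(district, []).append(waits[pid])
    district_means = [mean(w) for w in by_district.values()]
    return urgent, max(district_means) - min(district_means)


if __name__ == "__main__":
    for name, policy in [("first come", first_come), ("by severity", by_severity)]:
        urgent, gap = score(PATIENTS, run_twin(PATIENTS, policy))
        print(f"{name}: urgent mean wait {urgent:.1f} min, district gap {gap:.1f} min")
    # first come: urgent mean wait 20.0 min, district gap 16.7 min
    # by severity: urgent mean wait 5.0 min, district gap 3.3 min
```

With this invented data, severity-first ordering both cuts the urgent patients’ mean wait and narrows the district gap. With other data the two metrics can pull in opposite directions, and surfacing that kind of trade-off before launch is the point of running the twin.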

Contrast With Traditional Approaches

The initiative reportedly differs from typical academic research that often concludes with white papers rather than auditable systems, and from corporate AI ethics councils that stop at policy statements. Instead, sAIpien requires measurable proof through prototypes with verifiable performance metrics. The lab’s alliance model also invites cross-sector peer review, creating safeguards against competitive secrecy while enabling shared learning across industries.

Broader AI Governance Landscape

The MIT initiative arrives as institutions worldwide tighten their AI governance frameworks. The U.S. National Institute of Standards and Technology (NIST) has published its AI Risk Management Framework, while the UK AI Safety Institute launched Inspect, a platform for evaluating model behavior. AI developers have also set out their own approaches, such as Microsoft’s Responsible AI Standard and Anthropic’s constitutional approach to training models.

Building “SOX for AI”

As AI deployment moves from pilot projects to core business operations, questions arise about continuous auditing and verification. The sAIpien program reportedly addresses this by linking interaction design with compliance-ready documentation, creating what analysts describe as a “SOX for AI,” a reference to the Sarbanes-Oxley Act of 2002, which transformed corporate financial accountability. This approach could give executives consistent artifacts of assurance, such as documents, logs, and evaluation traces, that regulators and internal risk teams can verify.
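One common way to make logs verifiable after the fact is a hash chain, in which each entry’s SHA-256 digest also covers the previous entry’s digest. The Python sketch below assumes that technique purely for illustration; the program has not said it uses it, and the record fields and the model name triage-v1 are hypothetical. A risk team holding a copy of the log can recompute the chain and detect edited or reordered entries, as well as any removed entry other than the last.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, record):
    """Hash the previous digest together with a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, record):
    """Append a record whose hash covers both its content and the prior entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev_hash, "hash": _digest(prev_hash, record)})
    return log


def verify(log):
    """Recompute the chain; editing, reordering, or removing a non-final entry fails."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["record"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    # Hypothetical evaluation-trace records
    append_entry(log, {"model": "triage-v1", "metric": "urgent_mean_wait_min", "value": 5.0})
    append_entry(log, {"model": "triage-v1", "metric": "district_gap_min", "value": 3.3})
    print(verify(log))  # True
    log[0]["record"]["value"] = 1.0  # simulate an after-the-fact edit
    print(verify(log))  # False
```

A chain alone cannot reveal that the newest entries were cut off, so deployments typically also publish or countersign the latest hash with a party outside the team that writes the log.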

Cross-Disciplinary Implementation

The founding faculty roster spans disciplines from space systems to urban analytics, giving sAIpien reach across enterprise, government, and city-scale networks. Through collaborative labs including City Science, Human Dynamics, and Space Enabled, the program creates a testbed where prototypes can be thoroughly audited rather than merely demonstrated. This interdisciplinary approach reflects the Media Lab’s tradition of blending human-computer interaction with practical implementation challenges.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.