The Domino Effect in the Cloud

What began as a seemingly isolated DNS issue with Amazon’s DynamoDB service rapidly escalated into a full-scale cloud infrastructure crisis that exposed the intricate dependencies within modern cloud architectures. The incident, which started in AWS’s US-EAST-1 region, demonstrated how tightly coupled services in contemporary cloud environments can create cascading failures that extend far beyond the initial problem.

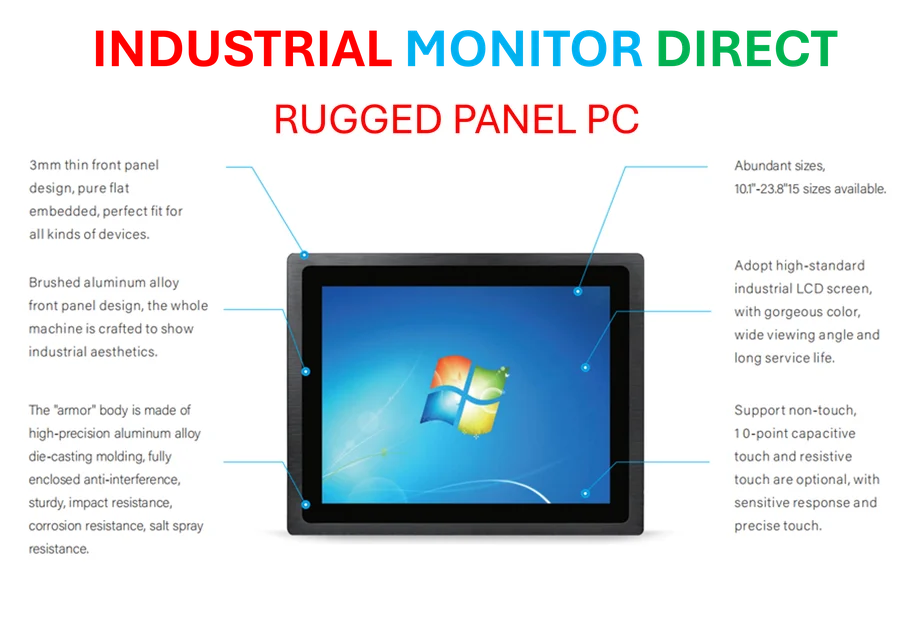

The disruption timeline reveals a textbook example of systemic risk in complex systems. After resolving the initial DynamoDB DNS issue, AWS engineers discovered that their recovery efforts had inadvertently triggered failures in other critical services, creating a multi-layered outage that took over a dozen hours to fully resolve.

The Core Infrastructure Breakdown

The crisis unfolded in distinct phases, each revealing deeper interconnections within AWS’s service ecosystem. The initial DynamoDB resolution led to what AWS described as “a subsequent impairment in the internal subsystem of EC2 responsible for launching EC2 instances due to its dependency on DynamoDB.” This dependency chain meant that Amazon’s foundational compute service became degraded precisely when customers needed it most during recovery.

As engineers worked to restore EC2 functionality, the problems multiplied. Network Load Balancer health checks became impaired, creating network connectivity issues across multiple services including Lambda, DynamoDB, and CloudWatch. This secondary failure demonstrates how cloud services that appear independent to customers often share underlying infrastructure components.
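
The way one failure spreads along a dependency chain can be sketched as a graph traversal. The dependency map below is a deliberately simplified assumption, loosely based on the relationships named above; it is not AWS’s actual internal architecture, and the service names are illustrative labels only.

```python
from collections import deque

# Hypothetical, simplified map: service -> services it depends on.
# Loosely based on the relationships described in this article, not on
# any published AWS dependency graph.
DEPENDS_ON = {
    "dynamodb": ["dns"],
    "ec2_launch": ["dynamodb"],
    "nlb_health_checks": ["ec2_launch"],
    "lambda": ["nlb_health_checks"],
    "cloudwatch": ["nlb_health_checks"],
}


def impacted_by(failed, depends_on):
    """Return every service that transitively depends on `failed`."""
    # Invert the map so we can walk from a dependency to its dependents.
    dependents = {}
    for service, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(service)

    impacted, queue = set(), deque([failed])
    while queue:
        current = queue.popleft()
        for service in dependents.get(current, []):
            if service not in impacted:
                impacted.add(service)
                queue.append(service)
    return impacted


print(sorted(impacted_by("dns", DEPENDS_ON)))
# ['cloudwatch', 'dynamodb', 'ec2_launch', 'lambda', 'nlb_health_checks']
```

Even in this toy map, a fault at the bottom of the chain reaches every service above it, which is why failures in widely shared components are so hard to contain.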

Controlled Throttling: Damage Control Strategy

AWS’s response included implementing strategic throttling of certain operations, including EC2 instance launches, processing of SQS queues via Lambda Event Source Mappings, and asynchronous Lambda invocations. This approach represents a calculated trade-off between service availability and system stability during recovery operations.

“The decision to throttle operations likely prevented a complete system collapse,” explains cloud infrastructure expert Maria Rodriguez. “When cloud services recover from major outages, the sudden flood of pent-up requests can overwhelm systems that are still stabilizing. Controlled throttling acts as a circuit breaker to prevent cascading failures.”
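
A token bucket is one common way to implement this kind of throttling. The sketch below is a generic illustration of the idea, not a description of the mechanism AWS used; the class name, rate, and capacity are assumptions chosen for the example.

```python
import time


class TokenBucket:
    """Admit at most `rate` operations per second, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should queue the request or retry later


# A fake clock stands in for wall time so the example is deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=5, clock=lambda: fake_now[0])

burst = [bucket.allow() for _ in range(8)]  # 8 pent-up requests arrive at once
fake_now[0] += 1.0                          # one second later
later = [bucket.allow() for _ in range(3)]

print(burst.count(True), later.count(True))  # 5 2
```

Only five of the eight queued requests are admitted immediately; the rest must wait for the bucket to refill, which gives a recovering backend room to stabilize.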

Long Tail of Cloud Recovery

Despite AWS declaring full service restoration by 3:01 PM PDT, the incident highlights the extended recovery period that often follows major cloud disruptions. Services including AWS Config, Redshift, and Connect continued processing backlogs for hours after the main outage was resolved.

This extended recovery phase underscores the challenge of distributed system consistency. Modern cloud applications don’t simply switch back on like traditional systems – they must reconcile data across multiple regions, process queued transactions, and restore synchronization across distributed components.
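
One reason backlogs take hours to clear is that new work keeps arriving while the queue drains, so only the spare capacity goes toward the backlog. A rough worked example, using made-up numbers rather than figures from the incident:

```python
def drain_time_hours(backlog, arrival_per_hour, capacity_per_hour):
    """Hours needed to clear a backlog while new work keeps arriving."""
    spare = capacity_per_hour - arrival_per_hour
    if spare <= 0:
        raise ValueError("backlog never drains: capacity must exceed arrivals")
    return backlog / spare


# Illustrative numbers only: 6M queued items, 1M new items per hour,
# and capacity to process 1.5M items per hour.
print(drain_time_hours(6_000_000, 1_000_000, 1_500_000))  # 12.0
```

In this example the system can process 1.5 million items per hour, yet the backlog shrinks by only 500,000 per hour, so a six-million-item queue takes twelve hours to drain.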

Broader Implications for Cloud Architecture

The incident raises important questions about dependency management in cloud-native architectures. Key considerations include:

- The hidden dependencies between seemingly independent cloud services

- The challenge of maintaining service isolation in increasingly complex cloud environments

- The importance of designing for graceful degradation during partial outages

- The need for better transparency in cloud service dependency mapping
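
Graceful degradation, the third consideration above, can be as simple as serving the last known value when a dependency is unavailable. The sketch below is a minimal illustration; `fetch_with_fallback` and the stand-in dependency functions are hypothetical names, not part of any AWS SDK.

```python
def fetch_with_fallback(fetch, cache, key):
    """Try the live dependency; on failure, serve the last known value.

    Returns (value, is_stale). Re-raises if there is nothing cached.
    """
    try:
        value = fetch(key)
    except Exception:
        if key in cache:
            return cache[key], True  # degraded, but still answering
        raise
    cache[key] = value
    return value, False


# Hypothetical stand-ins for a real dependency call.
def healthy(key):
    return f"profile-for-{key}"


def broken(key):
    raise ConnectionError("dependency unavailable")


cache = {}
print(fetch_with_fallback(healthy, cache, "user-1"))  # ('profile-for-user-1', False)
print(fetch_with_fallback(broken, cache, "user-1"))   # ('profile-for-user-1', True)
```

Returning the staleness flag lets callers decide whether old data is acceptable for a given feature rather than failing the whole request.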

For organizations relying on cloud infrastructure, this incident serves as a reminder to regularly monitor AWS service health dashboards and implement multi-region disaster recovery strategies. The complexity of modern cloud environments means that single points of failure can have far-reaching consequences that are difficult to predict during normal operations.
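
At the application level, a multi-region strategy often includes client-side failover. The following is a minimal sketch under stated assumptions: `call_with_region_failover` and `read_order` are hypothetical helpers, and the approach only works if the data and configuration were already replicated to the secondary region before the outage.

```python
def call_with_region_failover(regions, call):
    """Try `call(region)` in preference order; return (region, result) on first success."""
    errors = {}
    for region in regions:
        try:
            return region, call(region)
        except Exception as exc:
            errors[region] = exc  # remember why this region failed
    raise RuntimeError(f"all regions failed: {sorted(errors)}")


# Hypothetical stand-in for a real client call; the primary region is down here.
def read_order(region):
    if region == "us-east-1":
        raise ConnectionError("regional endpoint unavailable")
    return {"order_id": 42, "served_from": region}


print(call_with_region_failover(["us-east-1", "us-west-2"], read_order))
# ('us-west-2', {'order_id': 42, 'served_from': 'us-west-2'})
```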

AWS has committed to publishing a detailed post-event summary, which will likely provide deeper insights into the architectural dependencies that contributed to the cascading nature of this outage. For cloud architects and DevOps teams, this documentation will be essential reading for understanding how to build more resilient systems in an increasingly interconnected cloud ecosystem.
