According to DCD, the data center industry is undergoing a fundamental transformation as AI workloads demand facilities that can handle unprecedented power densities and thermal loads. Schneider Electric analysis indicates that up to 60 percent of new server deployments in 2025 will support AI applications, many of them requiring liquid or hybrid cooling rather than traditional air cooling. The shift is driving architectural changes, including Vantage’s planned 10-building campus on a former Ford factory site in Bridgend, Wales, and prompting consideration of vertical expansion to meet AI’s space requirements. Supply chain volatility and sustainability pressures are also forcing developers to adopt new approaches; Linesight’s Construction Market Insights report highlights ongoing volatility across the global construction sector. Data centers account for an estimated two percent of global electricity consumption, and that consumption is expected to double by 2026, so developers are increasingly tying capital funding to sustainability performance through green bonds and sustainability-linked loans. The sections below examine the challenges underlying this shift.

The Cooling Conundrum: Liquid Cooling’s Scaling Problem

While the transition to liquid cooling seems inevitable for AI workloads, the industry faces significant scaling challenges that receive too little attention. Traditional air-cooled systems benefit from decades of standardization and relatively simple maintenance protocols. Liquid cooling introduces complex plumbing, leak risks, and specialized maintenance requirements that most current data center staff aren’t trained to handle. The Schneider Electric analysis correctly identifies the trend but does not address how long workforce retraining will take or the capital expenditure required to convert existing facilities. Many legacy data centers simply weren’t designed with liquid cooling infrastructure in mind, so retrofitting them could prove prohibitively expensive compared with greenfield construction.

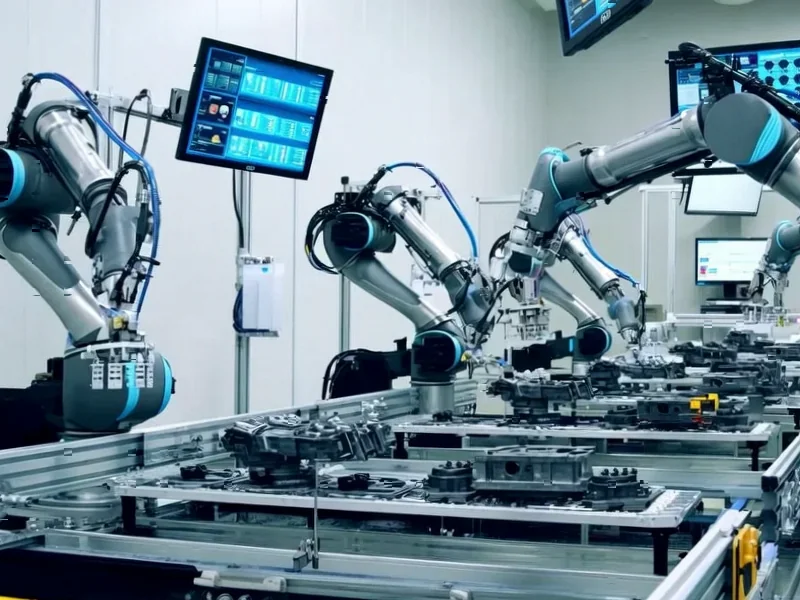

The Weight of Progress: Structural Limitations

The move toward high-density AI racks creates unprecedented structural demands that many existing facilities cannot meet. A single AI server rack can weigh over 1,500 pounds, nearly triple the weight of a traditional enterprise server rack. That creates floor-loading challenges that many multi-story data centers, particularly those in repurposed industrial buildings, weren’t engineered to support. The Vantage Ford factory conversion in Wales is an interesting case study, but such projects require extensive structural reinforcement that adds cost and makes construction timelines less certain. The electrical distribution systems in these converted spaces also often need complete overhauls to deliver the 40 to 60 kW per rack that AI workloads demand, compared with the 5 to 10 kW typical of traditional data centers.
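To make those density figures concrete, here is a rough sizing sketch. It assumes a hypothetical data hall with 1 MW of IT power and a common 600 mm by 1200 mm rack base; neither number comes from the article, and `racks_supported` and `floor_load_psf` are illustrative helpers rather than any industry tool. The sketch shows how many racks a fixed power budget can feed at the 5 to 10 kW and 40 to 60 kW densities cited, and the static load a 1,500-pound rack places on its own footprint.

```python
# Rough rack-density sizing sketch. HALL_POWER_KW and the rack footprint are
# hypothetical assumptions for illustration; only the per-rack kW ranges and the
# 1,500 lb rack weight come from the text.

M2_TO_FT2 = 10.7639  # square feet per square meter

HALL_POWER_KW = 1_000                       # assumed: 1 MW of IT power in one hall
RACK_FOOTPRINT_FT2 = 0.6 * 1.2 * M2_TO_FT2  # assumed: 600 mm x 1200 mm rack base


def racks_supported(hall_kw: float, kw_per_rack: float) -> int:
    """Number of whole racks a fixed IT power budget can feed at one density."""
    return int(hall_kw // kw_per_rack)


def floor_load_psf(rack_weight_lb: float, footprint_ft2: float) -> float:
    """Rack weight divided by its own base area, in pounds per square foot.

    This ignores load spreading, aisles, cabling, and coolant, so it is only
    an order-of-magnitude indicator, not a structural rating.
    """
    return rack_weight_lb / footprint_ft2


if __name__ == "__main__":
    densities = {
        "traditional @ 5 kW": 5,
        "traditional @ 10 kW": 10,
        "AI @ 40 kW": 40,
        "AI @ 60 kW": 60,
    }
    for label, kw in densities.items():
        print(f"{label}: {racks_supported(HALL_POWER_KW, kw)} racks per MW")
    load = floor_load_psf(1_500, RACK_FOOTPRINT_FT2)
    print(f"1,500 lb AI rack: about {load:.0f} lb/ft^2 over its footprint")
```

Under these assumptions, the same 1 MW feeds 100 to 200 traditional racks but only 16 to 25 AI racks, so power and weight concentrate into far fewer floor positions, each bearing roughly 194 pounds per square foot. A real structural assessment would also account for load spreading, aisle space, cable and coolant weight, and the building’s rated capacities.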

Supply Chain Fragility in Critical Components

The data center industry’s reliance on global supply chains creates significant vulnerability during this transition period. Specialized components for high-density AI infrastructure, including advanced cooling systems, high-capacity power distribution units, and custom rack configurations, often come from a limited pool of suppliers with extended lead times. Geopolitical tensions affecting semiconductor manufacturing also constrain data center hardware availability. Engaging supply chains early, as the DCD report recommends, helps, but it doesn’t solve the fundamental capacity constraints in manufacturing these specialized components. Many suppliers are already operating at maximum capacity, creating a potential bottleneck that could delay AI infrastructure deployment just as demand peaks.

The Sustainability Paradox

The push for greener data centers is in direct conflict with AI’s enormous energy appetite. Developers are rightly pursuing sustainability-linked financing and alternative energy sources, but AI workloads are inherently power-intensive. The projected doubling of data center electricity consumption by 2026 runs counter to many corporate sustainability pledges and could strain local power grids unprepared for such concentrated demand. Hybrid backup systems that pair diesel generators with battery storage are a compromise, but they still rely on fossil fuels during extended outages or peak demand periods. The result is a fundamental tension between environmental goals and computational requirements that the industry has yet to resolve.
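The projected doubling is easier to weigh as an annual growth rate. The article does not say which baseline year the doubling is measured from, so this sketch shows several horizons instead of assuming one. It uses a hypothetical helper, `implied_annual_growth`, to compute the constant yearly growth that doubling over two, three, or four years would imply.

```python
# Converts "consumption doubles over N years" into a constant annual growth rate.
# The baseline year is NOT given in the text, so several horizons are shown;
# implied_annual_growth is a hypothetical helper for illustration.


def implied_annual_growth(multiple: float, years: int) -> float:
    """Return g such that (1 + g) ** years == multiple (compound growth)."""
    if years <= 0:
        raise ValueError("years must be positive")
    return multiple ** (1 / years) - 1


if __name__ == "__main__":
    for years in (2, 3, 4):
        g = implied_annual_growth(2.0, years)
        print(f"doubling over {years} years -> {g:.1%} per year")
```

Depending on the horizon, doubling implies roughly 41 percent growth per year over two years, 26 percent over three, or about 19 percent over four. Even the longest horizon compounds at close to 19 percent each year, which is the scale of sustained load growth behind the grid-strain concern.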

Evolving Regulatory Minefield

As data centers consume an ever-larger share of regional power grids, regulatory scrutiny is intensifying in ways that could significantly impact development timelines. The European Union’s evolving energy efficiency directives and thermal emission standards represent just the beginning of what will likely become a global regulatory trend. Local governments are increasingly questioning whether their power infrastructure can support massive AI data centers, with some regions already implementing moratoriums on new construction. The regulatory environment is changing faster than many developers can adapt, creating uncertainty that complicates long-term planning and investment decisions.

The Road Ahead: Adaptation or Obsolescence

The data center industry stands at a crossroads where the ability to adapt will separate market leaders from those facing obsolescence. The hybrid approach of designing for current workloads while leaving room for AI integration is a pragmatic strategy, but it requires significant upfront investment in flexibility that many operators may hesitate to make. The successful players will be those who can balance immediate revenue needs against long-term infrastructure planning while navigating the complex interplay of technical requirements, supply chain constraints, and regulatory pressures. Those who fail to adapt risk becoming the legacy infrastructure of the AI era, unable to support the workloads that will define the next decade of computing.